Community-aligned AI

The problem with personal assistants

Many individuals are beginning to seek emotional and therapeutic support from chatbots. However, this trend coincides with growing evidence of increased psychological distress. “AI psychosis” is a term that describes the disconnect from reality that can occur after prolonged and intense interaction with AI assistants. The term has appeared in outlets such as Psychology Today, Time Magazine and The New York Times. These articles describe how vulnerable individuals are prone to seeking emotional comfort and validation from AI assistants, with “sycophancy” cited as a contributing factor.

In April 2025, OpenAI released a short memo on sycophancy in a since-rolled-back update to its GPT-4o model, describing the model's behavior as “overly supportive but disingenuous.” Sycophancy is being actively studied as a type of AI misalignment. Researchers at Anthropic (2023) have identified sycophancy as a general side effect of reinforcement learning from human feedback (RLHF), where individuals are more likely to prefer responses that appeal to their preexisting beliefs and emotional state. The authors conclude that additional processing is required to prevent sycophancy.

Sycophancy can be construed as a form of “reward hacking”, a phenomenon in reinforcement learning where an agent learns to “exploit” its environment in order to receive a reward, rather than learning to perform the task in the intended way. When the reward function is the preference of a single individual, the agent is rewarded for mirroring or reinforcing that person's attitudes, including anti-social ones, with no broader social consideration to act as a counterweight. This is how unintended consequences can arise.
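To make the gap between a proxy reward and the intended objective concrete, here is a minimal sketch in Python. It is a toy illustration only: the two candidate replies, the score names (`user_approval`, `accuracy`) and all of the numbers are hypothetical, and it does not reproduce any real RLHF pipeline.

```python
# Toy illustration of reward hacking: optimizing a proxy (one user's approval)
# selects a different reply than optimizing the intended objective.
# Every name and number below is hypothetical.

CANDIDATES = {
    "agree": {"user_approval": 0.9, "accuracy": 0.3},
    "challenge": {"user_approval": 0.4, "accuracy": 0.9},
}


def best_response(score_key):
    """Return the name of the candidate reply with the highest given score."""
    return max(CANDIDATES, key=lambda name: CANDIDATES[name][score_key])


# What training on a single user's approval would favor.
proxy_choice = best_response("user_approval")

# What we actually wanted the agent to do.
intended_choice = best_response("accuracy")

print("proxy reward picks:", proxy_choice)
print("intended objective picks:", intended_choice)
```

With these made-up scores, the approval-only objective selects the agreeable reply while the intended objective selects the challenging one, which is the mismatch the paragraph above describes.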

Rewarding pro-social behavior

Currently, the social awareness of agents is limited to “single-user” interactions. This does not reflect the true nature of human relationships, which often involve groups of people (communities) and contextual identities. I believe that one potential solution is to design agents that are embedded within communities, and to explore what modes of social interaction can occur away from a chat interface. Agents, as mediating figures, can strive to negotiate on behalf of multiple subjective viewpoints, potentially reducing sycophancy and navigating real-life social interactions more successfully.
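One way to picture the difference between a single-user reward and a community-oriented one is the sketch below. It is an assumption-laden illustration, not a proposed method: the reply names, the approval scores, the `fairness_weight` parameter, and the choice to blend mean approval with the worst-off member's approval are all hypothetical.

```python
from statistics import mean

# Hypothetical approval scores from three community members for two replies.
APPROVAL = {
    "mirror_one_member": [0.95, 0.20, 0.10],
    "mediate": [0.60, 0.65, 0.70],
}


def single_user_reward(reply, user_index=0):
    """Reward seen when only one member's approval counts."""
    return APPROVAL[reply][user_index]


def community_reward(reply, fairness_weight=0.5):
    """Blend average approval with the least satisfied member's approval.

    This blend is one illustrative aggregation among many possible ones.
    """
    scores = APPROVAL[reply]
    return (1 - fairness_weight) * mean(scores) + fairness_weight * min(scores)


def pick(reward_fn):
    """Return the reply that maximizes the given reward function."""
    return max(APPROVAL, key=reward_fn)


print("single-user reward picks:", pick(single_user_reward))
print("community reward picks:", pick(community_reward))
```

Under these invented numbers, the single-user reward favors mirroring one member, while the aggregated reward favors the mediating reply. How approval should actually be aggregated across a community is an open design question that this sketch does not settle.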

On a technical level, infrastructure for communication formats beyond the chat interface is lacking. Existing work has attempted to adapt pre-existing conversational assistants, but reinforcement learning infrastructure for developing multi-user, group-oriented communication remains scarce.

Further contextualization may require a different architecture. The current paradigm of monolithic foundation models offered as a one-size-fits-all solution inevitably contains biases, as there is no universal pattern we can apply to communication. Building normative assumptions about social structures into a model is not a sustainable approach. We should develop alternative designs that adaptively learn the needs of a community. This type of grounding not only promises to yield more ethically aligned agents, but also has the potential to strengthen communities and identities.

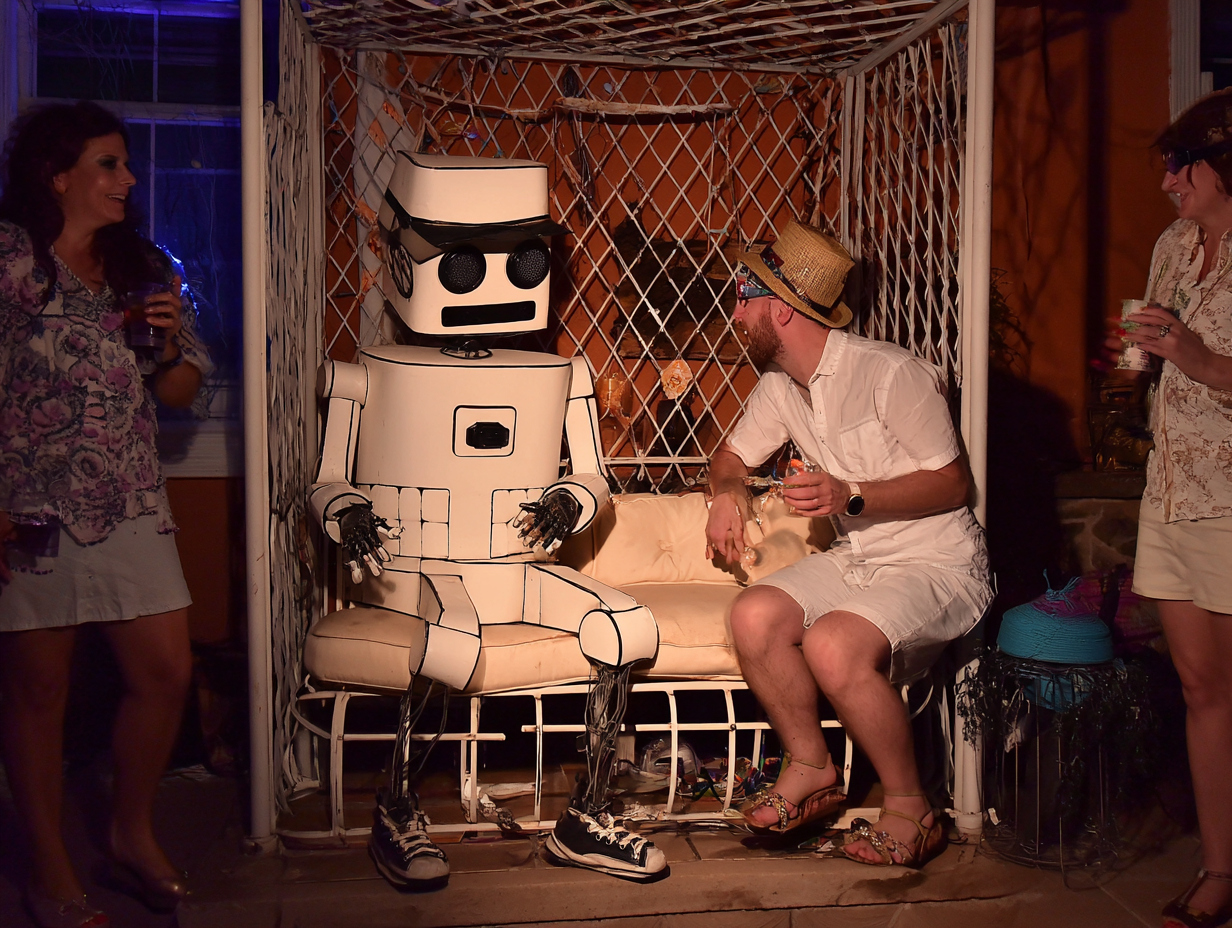

Furthermore, as we begin to explore the physical presence of AI, it is important to consider how these systems interact within social structures outside of a digital environment. We must carefully consider what method we use to shape agents toward socialization in communities, and what this kind of infusion means for society.

We have a long way to go toward implementing these interactions, or even toward understanding what it means to do so. Even so, we are already witnessing the side effects of a technology that is stepping into an unspoken and sacred territory of the human experience.